For almost as long as computers have existed, engineers have worked to develop better ways for humans to interact with technology.

Until the most recent iterations of the touchscreen, most interfaces between humans and computers were a compromise between ease of use and granular control. The touchscreen offered a much more natural and intuitive method of control that anyone can pick up easily.

Touchscreens are not ideal for every application, however. They add cost to low-cost products, they are awkward to use on small devices, they are a weak point in outdoor installations (challenged both by the environment and by vandals), they can pose a safety risk and, of course, they require the user to be physically close to the device. For these and similar applications, designers need an intuitive way to control technology without these drawbacks. Voice recognition and control could be the perfect solution.

There is nothing new about the idea of controlling technology by voice alone, and there have been many attempts to harness the power of speech, with varying degrees of success. Only fairly recently, however, have big leaps in speech recognition algorithms, combined with large-scale computing power, enabled designers to create technology that responds quickly and precisely to a huge variety of commands.

Early speech systems

The first attempt at creating a computer that could understand human speech was made in 1952 at Bell Laboratories. The system, named Audrey, was very basic: it could only recognise a few spoken numbers, and only when they were spoken by specific people.

Speech recognition improved incrementally until the 1970s, when the US Department of Defense set up the Darpa (Defense Advanced Research Projects Agency) Speech Understanding Research (SUR) program. This huge project ran from 1971 to 1976.

The research eventually led to the development of Carnegie Mellon's 'Harpy' system, which had a vocabulary of over 1,000 words. Rather than simply evolving earlier systems, Harpy employed a ground-breaking search approach that was far more efficient than its predecessors.

Harpy's approach was beam search, a heuristic search algorithm that explores a graph by expanding only the most promising nodes in a limited set; it is an optimisation of best-first search that reduces its memory requirements. Harpy used it to search a finite-state network of possible sentences for the one that best matched the spoken input.
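To make the idea concrete, here is a minimal Python sketch of beam search over a toy finite-state network of sentences. The network, its words and its scores are invented for illustration and are not Harpy's; in a real recogniser the scores would come from matching the audio against acoustic models. At each step only the `beam_width` best partial sentences survive, which is what keeps memory use bounded.

```python
import math
from typing import Dict, List, Optional, Tuple

# Toy finite-state network (hypothetical, for illustration only):
# state -> list of (word, next_state, log-score).
Network = Dict[str, List[Tuple[str, str, float]]]

NETWORK: Network = {
    "S": [("turn", "A", math.log(0.6)), ("tell", "B", math.log(0.4))],
    "A": [("on", "C", math.log(0.7)), ("off", "C", math.log(0.3))],
    "B": [("me", "D", math.log(1.0))],
    "C": [("lights", "END", math.log(0.9)), ("music", "END", math.log(0.1))],
    "D": [("time", "END", math.log(1.0))],
}


def beam_search(network: Network, start: str, final: str,
                beam_width: int, max_steps: int = 20) -> Optional[Tuple[str, float]]:
    """Return the best complete sentence found and its total log-score."""
    beam = [(0.0, start, ())]  # (cumulative log-score, state, words so far)
    finished = []
    for _ in range(max_steps):
        candidates = []
        for score, state, words in beam:
            for word, nxt, logp in network.get(state, []):
                hyp = (score + logp, nxt, words + (word,))
                if nxt == final:
                    finished.append(hyp)  # a complete sentence
                else:
                    candidates.append(hyp)  # still a partial sentence
        # Pruning: keep only the beam_width most promising partial sentences.
        beam = sorted(candidates, key=lambda h: h[0], reverse=True)[:beam_width]
        if not beam:
            break
    if not finished:
        return None
    best = max(finished, key=lambda h: h[0])
    return " ".join(best[2]), best[0]


print(beam_search(NETWORK, "S", "END", beam_width=1))
print(beam_search(NETWORK, "S", "END", beam_width=2))
```

With a beam width of 1 the search commits early to "turn" and returns "turn on lights" (probability 0.378), while a width of 2 keeps "tell" alive long enough to find the better-scoring "tell me time" (0.4). That is the trade-off beam search makes: a narrower beam saves memory but may prune the best path.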

The 1980s brought a huge leap in speech recognition with the Hidden Markov Model (HMM), a statistical modelling technique that estimates the probability that an unknown sequence of sounds is a particular word. This expanded the number of words a computer could learn to several thousand.
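As a rough illustration of how an HMM scores a word, the sketch below models each word as a tiny HMM and uses the standard forward algorithm to compute the probability that the observed sounds were produced by that word; the recogniser then picks the most likely word. The two word models, their single-letter "sound" symbols and all probabilities are invented for this example; real systems work on acoustic feature vectors and far larger models.

```python
from typing import Dict, Sequence

# Hypothetical word models: start, transition and emission probabilities.
WordModel = Dict[str, Dict]

YES: WordModel = {
    "start": {"y": 1.0},
    "trans": {"y": {"y": 0.5, "e": 0.5}, "e": {"e": 0.5, "s": 0.5}, "s": {"s": 1.0}},
    "emit": {"y": {"y": 0.8, "e": 0.2}, "e": {"e": 0.9, "s": 0.1}, "s": {"s": 0.9, "e": 0.1}},
}
NO: WordModel = {
    "start": {"n": 1.0},
    "trans": {"n": {"n": 0.5, "o": 0.5}, "o": {"o": 1.0}},
    "emit": {"n": {"n": 0.9, "o": 0.1}, "o": {"o": 0.9, "n": 0.1}},
}


def forward_likelihood(model: WordModel, obs: Sequence[str]) -> float:
    """Forward algorithm: P(observed sounds | word model), summed over all state paths."""
    states = list(model["emit"])
    trans, emit = model["trans"], model["emit"]
    # alpha[s] = probability of the sounds seen so far, ending in hidden state s
    alpha = {s: model["start"].get(s, 0.0) * emit[s].get(obs[0], 0.0) for s in states}
    for o in obs[1:]:
        alpha = {
            s: sum(alpha[r] * trans[r].get(s, 0.0) for r in states) * emit[s].get(o, 0.0)
            for s in states
        }
    return sum(alpha.values())


def recognise(obs: Sequence[str], models: Dict[str, WordModel]) -> str:
    """Pick the word whose model most probably produced the observed sounds."""
    return max(models, key=lambda word: forward_likelihood(models[word], obs))


print(recognise(["y", "e", "s"], {"yes": YES, "no": NO}))
```

Because each word is a separate model scored the same way, growing the vocabulary means adding more models rather than redesigning the recogniser, which helps explain how HMM-based systems scaled to thousands of words.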

The next major technological advance came in 1997 with the launch of Dragon NaturallySpeaking, the first system that could understand natural, continuous speech. It could process around 100 words per minute.

These breakthroughs provided the basic building blocks for speech recognition. What was needed to bring the technology into the mainstream was low cost, widely available, large-scale computing that would provide real-time responses for control.

This came more recently from two industry giants, Google and Apple.

In 2011, Apple launched Siri, the intelligent digital personal assistant on the iPhone 4S. Siri added a level of user control, allowing users to call friends, dictate messages or play music using voice control.

In 2012, Google's Voice Search app, originally developed for Apple's iPhone, took advantage of the phone's inherent connectivity to compare search phrases against the vast store of user-search data that the company had accumulated in the cloud. Comparing against previous searches brought a huge jump in accuracy, because it allowed artificial intelligence (AI) to better understand the context of each search.

In practice, Google Voice Search and Siri were a secondary means of control alongside the touchscreen. Amazon took the concept to another level with its Echo, which combined a digital personal assistant with a speaker and dropped the touchscreen altogether.

With the success of intelligent digital personal assistants, more designers and hobbyists are considering speech recognition and control for their next design. Industry analyst ABI Research estimates that 120 million voice‑enabled devices will ship annually by 2021 and that voice control will be a key user interface for the smart home.

Incorporating voice control

For designers who wish to incorporate voice control into a product, there are a few things to consider.

It is possible to build the whole system from the ground up so that it operates offline, but such a system would offer relatively limited functionality: the speech recognition algorithms and libraries would be constrained by the device's memory, and adding new commands would be difficult.

PocketSphinx, a lightweight recogniser from Carnegie Mellon's CMU Sphinx project, is one example. It has been developed for handheld Android devices, and the latest version can be used as a standalone app on any device running Android Wear 2.0.
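One way an offline design can live within tight memory limits is to restrict itself to a small, fixed set of commands. The sketch below assumes a local recogniser (PocketSphinx or similar) has already turned the audio into a text transcript; the command table and the `match_command` helper are hypothetical, and Python's standard `difflib` fuzzy matching stands in for whatever grammar or keyword list a real recogniser would use.

```python
import difflib
from typing import Optional

# Hypothetical fixed command set: every phrase the device understands must be
# listed here (and in the recogniser's vocabulary), which is why adding new
# commands to an offline product is awkward.
COMMANDS = {
    "lights on": "LIGHTS_ON",
    "lights off": "LIGHTS_OFF",
    "volume up": "VOLUME_UP",
    "volume down": "VOLUME_DOWN",
}


def match_command(transcript: str, cutoff: float = 0.75) -> Optional[str]:
    """Map a transcript to a command ID, tolerating small recognition errors."""
    phrase = " ".join(transcript.lower().split())  # normalise case and spacing
    matches = difflib.get_close_matches(phrase, COMMANDS.keys(), n=1, cutoff=cutoff)
    return COMMANDS[matches[0]] if matches else None


print(match_command("Lights on"))
print(match_command("lights of"))           # near-miss transcript
print(match_command("what's the weather"))  # outside the fixed command set
```

The `cutoff` value trades tolerance of misrecognised words against the risk of triggering the wrong command; anything outside the table is simply rejected, which is exactly the limitation a cloud service removes.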

Most developers will want to offer a more comprehensive instruction set, and this will require a connection to the cloud. Most large cloud providers, including Amazon and Google, offer speech tools as a service, and these can be relatively inexpensive to incorporate into designs. The choice of service will depend on a project’s priorities.

IBM, for instance, offers a speech service as part of its Watson cloud platform. This may appeal to those who want to take advantage of IBM's analytical expertise rather than a more generalised, consumer‑focused platform.

Both Amazon and Google offer a platform that is tailored for the general home automation market. Both have an ecosystem that includes some of the most respected developers of home automation products. Amazon’s partners include Nexia, Philips Hue, Cree, Osram, Belkin and Samsung devices. Google shares many of the same partners.

To help incorporate Amazon and Google voice control into products, the companies offer access to their platforms for a relatively low cost. Amazon’s Alexa Voice Service (AVS) allows developers to integrate Alexa directly, and provides a full suite of resources including application programming interfaces (APIs), software development kits (SDKs), hardware development kits and documentation.

Google also allows developers to use the functionality of Google Assistant, its intelligent digital personal assistant, through an SDK. This provides two integration options: the Google Assistant library and the Google Assistant gRPC API.

The library is written in Python and is supported on devices with Linux ARMv7l and Linux x86_64 architectures (such as the Raspberry Pi 3 B and Ubuntu desktops). It offers a high-level, event-based API that is easy to extend.
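For readers unfamiliar with the term, the sketch below shows what an event-based, easy-to-extend design looks like in general: handlers are registered against event types, and new behaviour is added by registering another handler rather than editing a central loop. `EventType`, `Dispatcher` and the event names are hypothetical and invented for this example; they are not the Google Assistant library's API, whose actual classes and events should be taken from Google's documentation.

```python
from enum import Enum, auto
from typing import Callable, Dict, List

Handler = Callable[[dict], None]


class EventType(Enum):  # hypothetical event names, for illustration only
    HOTWORD_DETECTED = auto()
    TEXT_RECOGNISED = auto()
    CONVERSATION_FINISHED = auto()


class Dispatcher:
    """Routes each incoming event to every handler registered for its type."""

    def __init__(self) -> None:
        self._handlers: Dict[EventType, List[Handler]] = {}

    def on(self, event_type: EventType) -> Callable[[Handler], Handler]:
        """Decorator that registers a handler for one event type."""
        def register(handler: Handler) -> Handler:
            self._handlers.setdefault(event_type, []).append(handler)
            return handler
        return register

    def dispatch(self, event_type: EventType, payload: dict) -> None:
        for handler in self._handlers.get(event_type, []):
            handler(payload)


dispatcher = Dispatcher()
actions: List[str] = []


@dispatcher.on(EventType.TEXT_RECOGNISED)
def handle_text(payload: dict) -> None:
    # Extend the product by adding handlers like this one.
    if "lights" in payload["text"]:
        actions.append("toggle lights")


# A real integration would feed events from the assistant's event stream;
# here a short, made-up sequence of events is replayed instead.
for event, payload in [
    (EventType.HOTWORD_DETECTED, {}),
    (EventType.TEXT_RECOGNISED, {"text": "turn on the lights"}),
    (EventType.CONVERSATION_FINISHED, {}),
]:
    dispatcher.dispatch(event, payload)

print(actions)
```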

The gRPC API provides lower-level access. Bindings for it can be generated for languages such as Node.js, Go, C++ and Java on any platform that supports gRPC.

For those who would rather avoid these services or use an open source interface, other options are available. For example, Mycroft is a free and open source intelligent personal assistant for Linux-based operating systems that uses a natural language user interface. The modular application allows users to change its components.

Jasper is another open source option that allows developers to add new functionality to the software.

Hardware considerations

As for hardware, the most likely host will be a single board computer, like a Raspberry Pi.

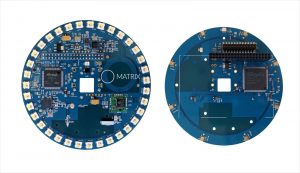

Some boards have been designed specifically for voice control applications, such as the Matrix Creator board, which can work as a Raspberry Pi HAT or as a standalone unit (pictured, left). The board has an array of seven MEMS microphones to give a 360° listening field and is powered by an Arm Cortex M3 with 64Mbit of SDRAM. A variety of sensors is also incorporated.

Off-the-shelf services that can be used with the board include Amazon AVS, Google Speech API and Houndify. Multiple microphones, often arranged in an array, can capture a more accurate representation of a sound. If the technology to stitch the sound from the microphones together is not built into the array, it may require extra design work and processing power. Noise reduction technology is also extremely important to ensure instructions are received accurately.
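One widely used technique for stitching microphone signals together (not named in the text above) is delay-and-sum beamforming: each channel is shifted so that sound from the chosen direction lines up in time, and the aligned channels are averaged. Sound from that direction adds up coherently, while uncorrelated noise partly cancels. This minimal sketch assumes the per-microphone delays are already known as whole numbers of samples; a real array would derive them from the microphone geometry and the speed of sound, usually with fractional delays.

```python
from typing import List, Sequence


def delay_and_sum(channels: Sequence[Sequence[float]], delays: Sequence[int]) -> List[float]:
    """Align each channel by its delay (in samples) and average the channels.

    delays[i] is how many samples later the target sound reaches microphone i
    than the earliest microphone; every delay must be >= 0.
    """
    if len(channels) != len(delays):
        raise ValueError("need exactly one delay per channel")
    if any(d < 0 for d in delays):
        raise ValueError("delays must be non-negative")
    # Output is limited by the channel with the least data left after shifting.
    length = min(len(ch) - d for ch, d in zip(channels, delays))
    return [
        sum(ch[n + d] for ch, d in zip(channels, delays)) / len(channels)
        for n in range(length)
    ]


# A click reaches mic 0 first, mic 2 one sample later and mic 1 two samples later.
mics = [
    [0, 1, 0, 0, 0],
    [0, 0, 0, 1, 0],
    [0, 0, 1, 0, 0],
]
print(delay_and_sum(mics, [0, 2, 1]))  # steered towards the click
print(delay_and_sum(mics, [0, 0, 0]))  # no steering
```

When steered correctly the three copies of the click line up and reinforce each other; without steering they are smeared across three samples at a third of the amplitude.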

The holy grail for developers is to find the most intuitive interface possible between humans and machines. Voice control has now reached a stage where the process feels almost as natural as talking to another human.

Pioneered by some of the biggest names in the industry, the technology is now available for use in developers' own designs, with much of the heavy processing done in the cloud.

There are also specialised boards, tools and services to simplify the process dramatically, meaning voice control can be added to almost any project.

Source: Electronics Weekly
